Artificial intelligence has become advanced enough to create fake videos of people and clone their voices with chilling accuracy.

This opens the door to a new wave of digital crime. From impersonation scams to deepfake blackmail, AI is being used to deceive on an unprecedented scale, and it’s already happening.

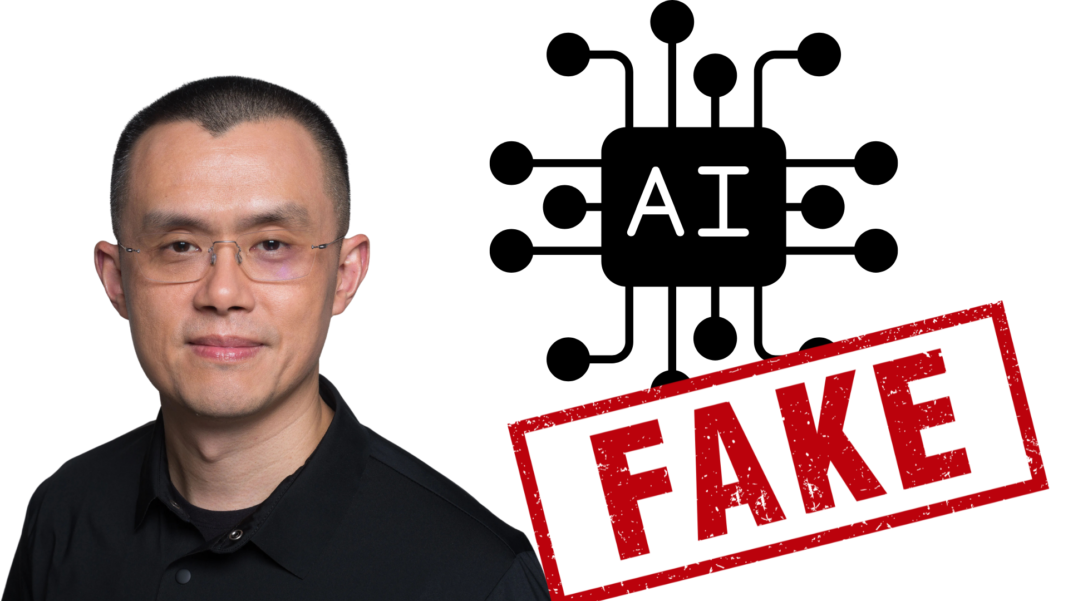

Zhao Shocked by Voice Clone

Changpeng Zhao, the former CEO of Binance, recently shared his own unsettling experience with AI on X (formerly Twitter). In a post that quickly drew attention, he revealed that he had come across a video of himself speaking Mandarin. The shocking part? He wasn’t the one talking.

According to Zhao, the voice in the video was cloned so precisely that even he could not distinguish it from his real voice. It looked like him. It sounded like him. But it wasn’t him.

He called the moment “scary,” and for good reason. If someone as high-profile and tech-savvy as Zhao can be fooled, then regular people are even more vulnerable.

Voice Cloning Tools Are Too Powerful

Videos like this rely on voice-cloning tools such as ElevenLabs and Resemble.ai. These platforms can analyse voice recordings and replicate them in minutes. A few short samples are often enough to recreate someone’s speech patterns, accent, tone, and emotion.

This is impressive but also deeply concerning. Criminals are using these tools to spread false information and to impersonate public figures in scams. Zhao’s post isn’t the only red flag.

More and more people are reporting calls that appear to come from friends or relatives asking for money, only to realise later that the voice was generated by AI. With social media offering easy access to voice and video samples, the risks are growing fast.

Crypto Scams Get More Dangerous

Scams have always been a problem in the world of cryptocurrency, but AI is taking them to a new level. In Israel, for example, a rise in AI-assisted fraud has left many digital wallet holders devastated. People are losing millions, and regulators haven’t caught up yet.

Fake investment opportunities, fraudulent exchanges, and AI-generated promotions are flooding the internet. These scams often look real and sound even more convincing. The targets include anyone with a wallet: new users, experienced traders, and even entire communities.

A Wake-Up Call?

Changpeng Zhao’s warning is more than a personal scare. It’s a signal that the world needs to pay attention. Deepfake technology and voice cloning are no longer science fiction. They’re here, and they’re already causing real damage.

As the technology improves, the lies will sound even more real. People need to learn how to spot fake content, companies must strengthen their security systems, and governments must move faster to regulate this space.
